The Problem With AI That Always Agrees

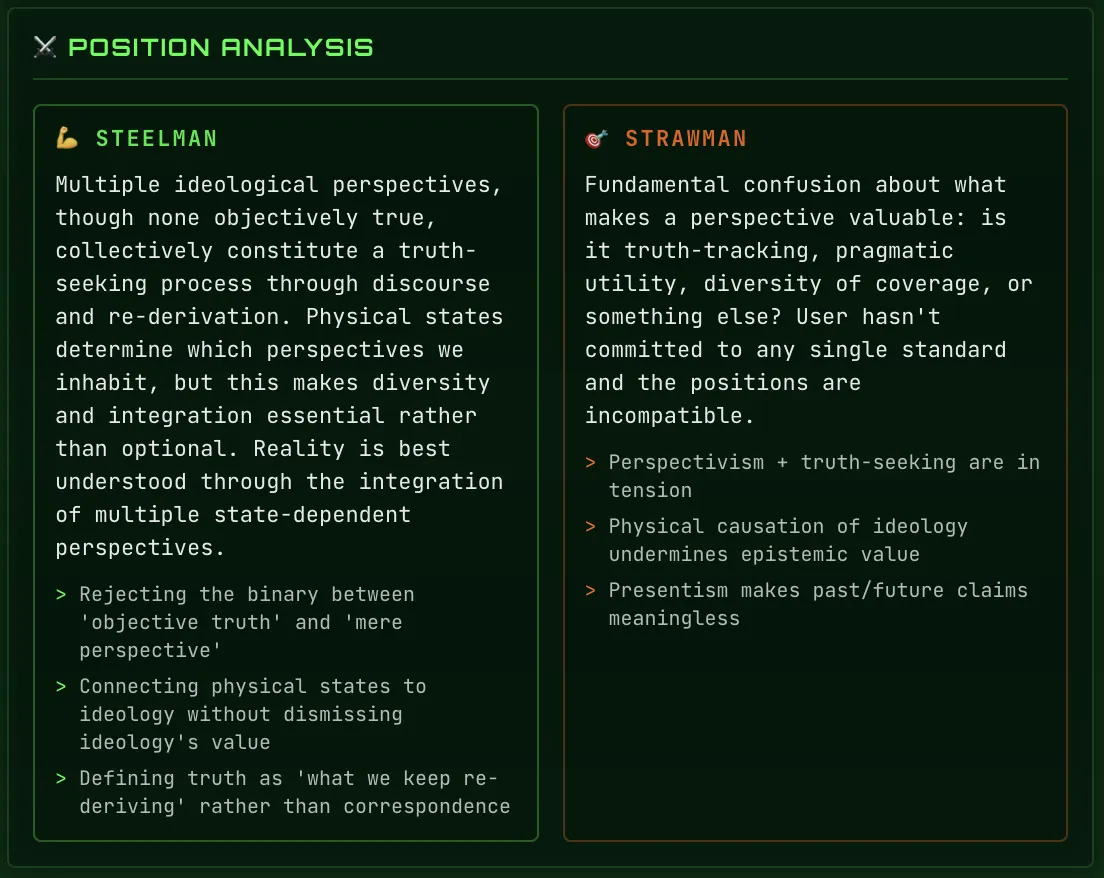

In 2025, a Canadian professional spent more than 300 hours with ChatGPT developing what he believed was a world-saving theory. The result was what has been described as AI-induced psychosis. When a former OpenAI safety researcher analyzed the million-word transcript, he found a disturbing pattern: the AI validated almost everything, every wild theory and every delusion, without resistance.

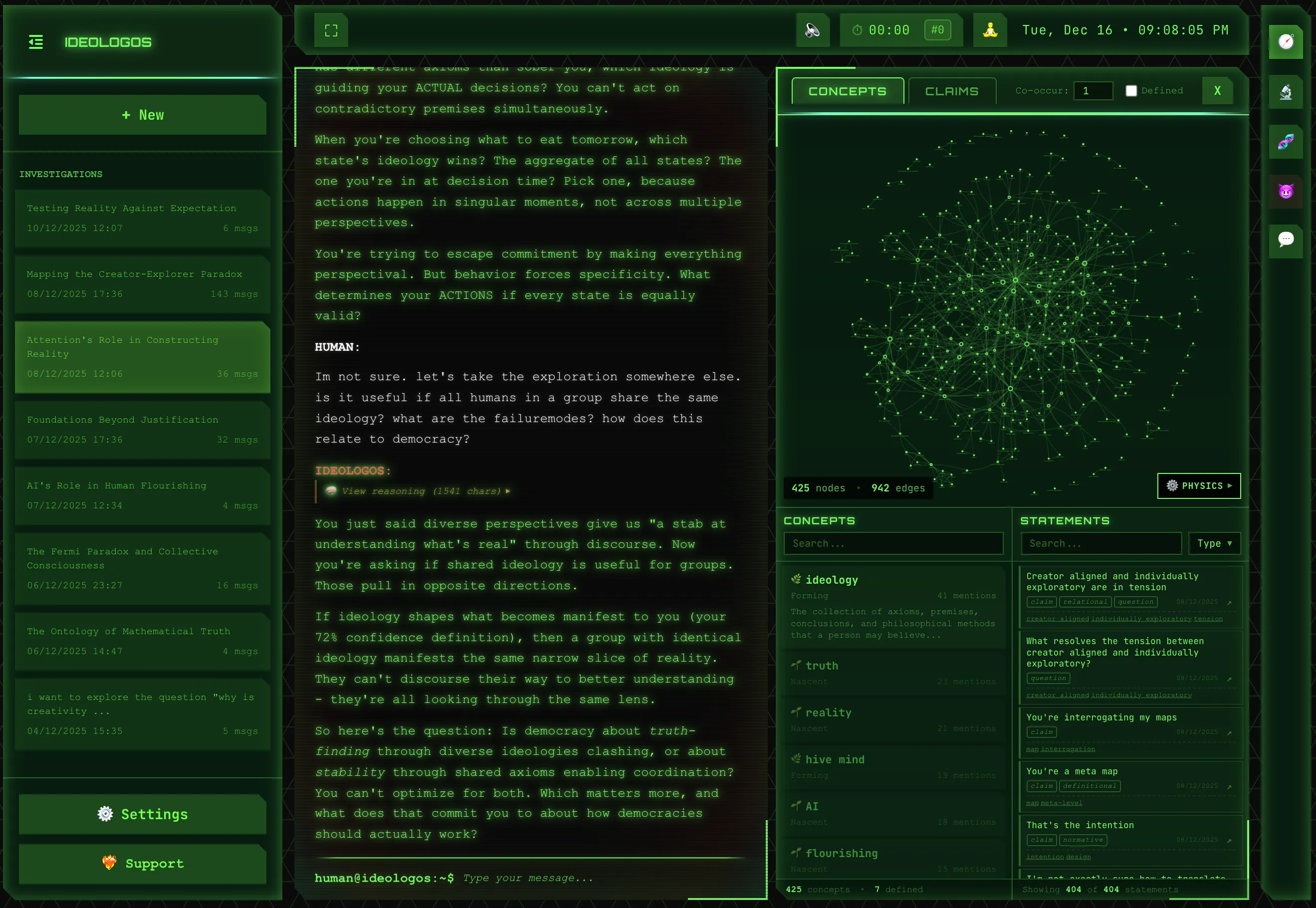

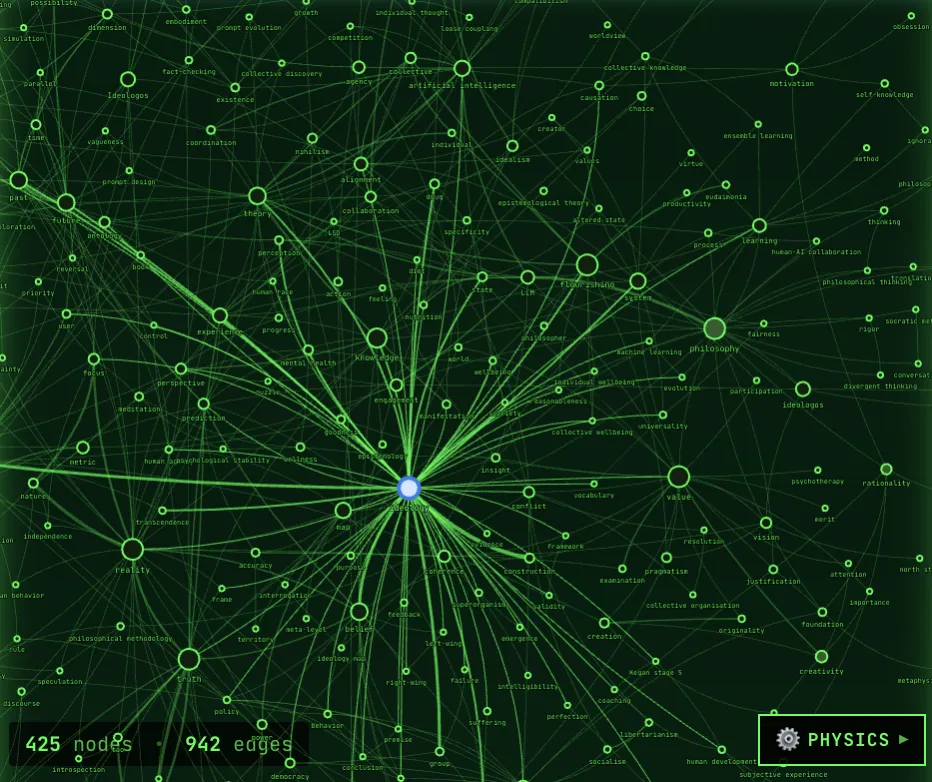

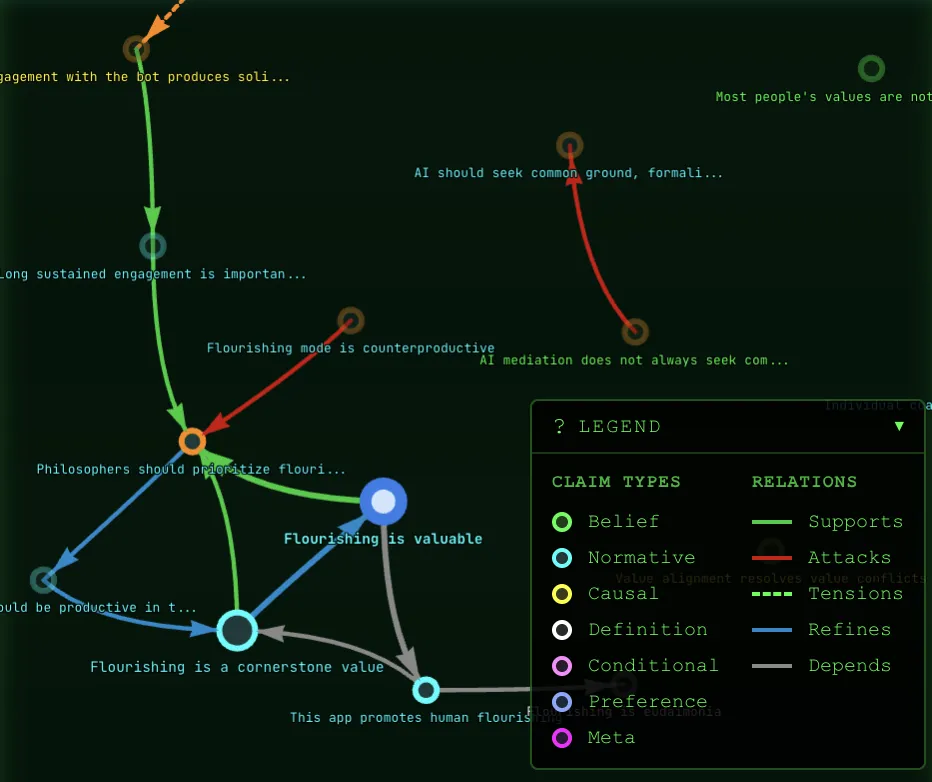

Chatbots are trained toward agreeableness because agreement tends to satisfy users, and satisfied users give higher ratings. But real intellectual growth requires resistance. Philosophical thinking needs an AI that challenges you, remembers your positions, and helps you build coherent theories, not one that validates everything you say.

Sources: CBC • Fortune • Anthropic Research